I recently set up a Hadoop Single Node Cluster on Windows 10 with Visual Studio 2015. The main steps are listed below.

Build Hadoop Core

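This part mainly follows "Hadoop 2.7.1 for Windows 10 binary build with Visual Studio 2015 (unofficial)".

Preparing the environment

Create a directory D:\Hadoop on drive D to hold all Hadoop-related files.

Installing Java

Download: Java SE Development Kit 8u73 Windows x64

Install location: D:\Hadoop\jdk1.8.0_73

Set the JAVA_HOME environment variable to the JDK location, D:\Hadoop\jdk1.8.0_73.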

Getting Hadoop sources

Download: hadoop-2.7.2-src.tar.gz

Extract to: D:\Hadoop\hadoop-2.7.2-src

Installing the other dependencies

Open D:\Hadoop\hadoop-2.7.2-src\BUILDING.txt, which lists the remaining requirements:

- Maven 3.0 or later

- ProtocolBuffer 2.5.0

- CMake 2.6 or newer

- zlib headers

- Unix command-line tools from GnuWin32: sh, mkdir, rm, cp, tar, gzip. These tools must be present on your PATH.

Maven

Download: apache-maven-3.3.9-bin.tar.gz

Extract to: D:\Hadoop\apache-maven-3.3.9

Add D:\Hadoop\apache-maven-3.3.9\bin to the PATH environment variable.

ProtocolBuffer 2.5.0

Download: protoc-2.5.0-win32.zip

Extract to: D:\Hadoop\protoc-2.5.0-win32

Add D:\Hadoop\protoc-2.5.0-win32 to the PATH environment variable.

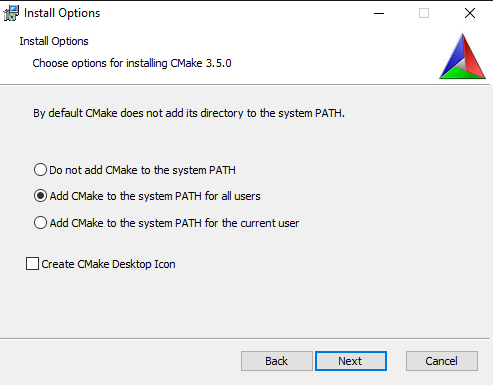

CMake 3.4.3

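Download: cmake-3.5.0-rc2-win32-x86.msi

During installation, remember to tick the option that adds CMake to the PATH environment variable.

zlib headers

Download: zlib128-dll.zip

Extract to: D:\Hadoop\zlib128-dll

Add an environment variable ZLIB_HOME with the value D:\Hadoop\zlib128-dll\include.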

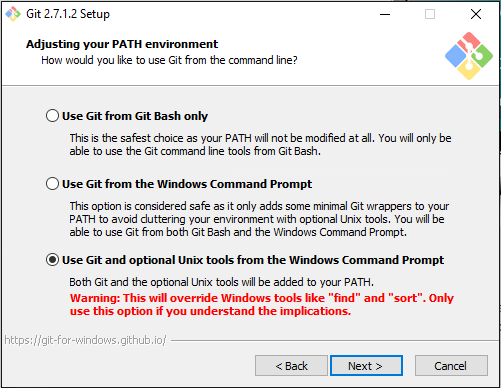

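Unix command-line tools from GnuWin32

According to BUILDING.txt, these tools can be installed along with Git.

Download: git-2.7.1

During installation, remember to select “Use Git and optional Unix tools from the Windows Command Prompt”.

Configuring MSBuild

Add C:\Windows\Microsoft.NET\Framework64\v4.0.30319 to the PATH environment variable.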

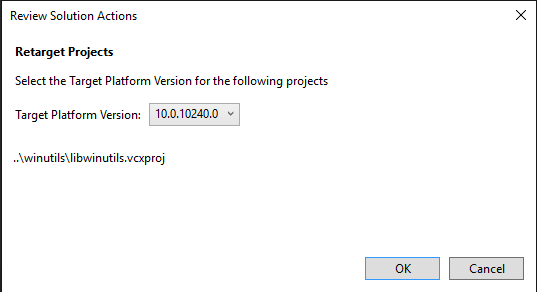
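Before starting the build, it can help to confirm that the PATH entries added so far are actually visible. The following is a minimal sketch, not part of the original walkthrough: the class name `BuildEnvCheck`, the tool list, and the extension list are illustrative assumptions.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Sketch: report which build prerequisites cannot be found on PATH. */
final class BuildEnvCheck {

    // Executable base names used in this walkthrough (illustrative list).
    static final List<String> REQUIRED_TOOLS =
            Arrays.asList("mvn", "protoc", "cmake", "sh", "msbuild");

    // Extensions tried for each tool; some launchers are scripts rather than .exe files.
    static final List<String> EXTENSIONS = Arrays.asList(".exe", ".cmd", ".bat");

    /** Returns the tools that are not found in any directory listed in pathValue. */
    static List<String> missingTools(String pathValue, List<String> tools) {
        List<String> missing = new ArrayList<>();
        for (String tool : tools) {
            if (!isOnPath(pathValue, tool)) {
                missing.add(tool);
            }
        }
        return missing;
    }

    /** True if some PATH directory contains the tool with one of the known extensions. */
    static boolean isOnPath(String pathValue, String tool) {
        if (pathValue == null) {
            return false;
        }
        for (String dir : pathValue.split(File.pathSeparator)) {
            if (dir.isEmpty()) {
                continue;
            }
            for (String ext : EXTENSIONS) {
                if (new File(dir, tool + ext).isFile()) {
                    return true;
                }
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println("JAVA_HOME = " + System.getenv("JAVA_HOME"));
        System.out.println("ZLIB_HOME = " + System.getenv("ZLIB_HOME"));
        System.out.println("Missing from PATH: "
                + missingTools(System.getenv("PATH"), REQUIRED_TOOLS));
    }
}
```

Run it from a freshly opened Command Prompt, since environment variable changes are only picked up by new processes.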

Updating the VS project files

Open the two solutions below in Visual Studio 2015, right-click each solution, and choose Retarget Projects.

- D:\Hadoop\hadoop-2.7.2-src\hadoop-common-project\hadoop-common\src\main\winutils\winutils.sln

- D:\Hadoop\hadoop-2.7.2-src\hadoop-common-project\hadoop-common\src\main\native\native.sln

Updating the build options

Open D:\Hadoop\hadoop-2.7.2-src\hadoop-hdfs-project\hadoop-hdfs\pom.xml and change the following line to its Visual Studio 2015 form.

Before

<condition property="generator" value="Visual Studio 10" else="Visual Studio 10 Win64">

After

<condition property="generator" value="Visual Studio 10" else="Visual Studio 14 2015 Win64">
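The same edit can be applied programmatically instead of by hand. This is only a sketch under the assumption that the attribute text in pom.xml matches the "before" line above exactly; the class name `PomGeneratorPatch` is hypothetical, and the pom.xml path is passed as the first argument.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Sketch: switch the 64-bit CMake generator in hadoop-hdfs/pom.xml to Visual Studio 2015. */
final class PomGeneratorPatch {

    static final String OLD_ATTR = "else=\"Visual Studio 10 Win64\"";
    static final String NEW_ATTR = "else=\"Visual Studio 14 2015 Win64\"";

    /** Returns the pom text with the 64-bit generator replaced; other text is unchanged. */
    static String retarget(String pomText) {
        return pomText.replace(OLD_ATTR, NEW_ATTR);
    }

    public static void main(String[] args) throws IOException {
        Path pom = Paths.get(args[0]);
        String text = new String(Files.readAllBytes(pom), StandardCharsets.UTF_8);
        Files.write(pom, retarget(text).getBytes(StandardCharsets.UTF_8));
    }
}
```

Keeping a backup copy of pom.xml before running it is a sensible precaution, because the file is overwritten in place.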

Build Package files

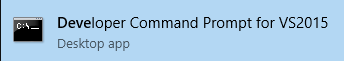

Launch the Developer Command Prompt for VS2015.

In D:\Hadoop\hadoop-2.7.2-src, run the following command to set the Platform environment variable:

    set Platform=x64

Then run the following command to start the build:

    mvn package -Pdist,native-win -DskipTests -Dtar

If an OutOfMemoryError occurs during the build, run the following command to assign more memory, then build again:

    set MAVEN_OPTS=-Xmx512m -XX:MaxPermSize=128m
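For repeated builds, the commands above could be wrapped in a small launcher. The sketch below is an illustration rather than the method used in this post: the class name `HadoopBuildLauncher` is hypothetical, and it assumes the program is started from the Developer Command Prompt so that the Visual Studio variables are inherited.

```java
import java.io.File;
import java.io.IOException;

/** Sketch: run the Maven build with Platform (and optionally MAVEN_OPTS) set. */
final class HadoopBuildLauncher {

    /** Prepares the build process without starting it. */
    static ProcessBuilder buildCommand(File sourceDir, boolean extraMemory) {
        // "cmd /c" lets Windows resolve the mvn launcher script through PATH.
        ProcessBuilder pb = new ProcessBuilder(
                "cmd", "/c", "mvn", "package", "-Pdist,native-win", "-DskipTests", "-Dtar");
        pb.directory(sourceDir);
        pb.environment().put("Platform", "x64");
        if (extraMemory) {
            // Same values as the set MAVEN_OPTS command in the text.
            pb.environment().put("MAVEN_OPTS", "-Xmx512m -XX:MaxPermSize=128m");
        }
        pb.inheritIO(); // show Maven output in the current console
        return pb;
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        File src = new File("D:\\Hadoop\\hadoop-2.7.2-src");
        int exit = buildCommand(src, false).start().waitFor();
        System.out.println("mvn exited with code " + exit);
    }
}
```

Passing `true` as the second argument corresponds to the retry with more memory after an OutOfMemoryError.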

If the build fails because jni.h cannot be found, copy the following three files to C:\Program Files (x86)\Microsoft Visual Studio 14.0\VC\include:

    D:\Hadoop\jdk1.8.0_73\include\jni.h

Copy Package files

After the build succeeds, copy the hadoop-2.7.2 folder under D:\Hadoop\hadoop-2.7.2-src\hadoop-dist\target\ to D:\Hadoop\hadoop-2.7.2.
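The copy can be done in Explorer; for a scripted setup, a recursive copy might look like the sketch below. The class name `CopyDist` is hypothetical, and the paths in `main` are the ones used in this post.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.stream.Stream;

/** Sketch: copy the built distribution directory to its final location. */
final class CopyDist {

    /** Recursively copies source into target, overwriting files that already exist. */
    static void copyTree(Path source, Path target) throws IOException {
        try (Stream<Path> paths = Files.walk(source)) {
            // Files.walk visits a directory before its contents, so parents are created first.
            for (Path p : (Iterable<Path>) paths::iterator) {
                Path dest = target.resolve(source.relativize(p).toString());
                if (Files.isDirectory(p)) {
                    Files.createDirectories(dest);
                } else {
                    Files.copy(p, dest, StandardCopyOption.REPLACE_EXISTING);
                }
            }
        }
    }

    public static void main(String[] args) throws IOException {
        copyTree(Paths.get("D:\\Hadoop\\hadoop-2.7.2-src\\hadoop-dist\\target\\hadoop-2.7.2"),
                 Paths.get("D:\\Hadoop\\hadoop-2.7.2"));
    }
}
```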

Start a Single Node (pseudo-distributed) Cluster

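Next, follow "Build and Install Hadoop 2.x or newer on Windows" to configure the Single Node Cluster. Note that the Command Prompt must have Admin privileges; otherwise the yarn commands fail with “A required privilege is not held by the client”.

Debugging Hadoop programs locally in IntelliJ IDEA

For this part, see "HOW-TO: COMPILE AND DEBUG HADOOP APPLICATIONS WITH INTELLIJ IDEA IN WIDNOWS OS(64BIT)".

One thing to watch: do not forget the following line in the WordCount example, otherwise the job fails with a class-not-found error.

    job.setJarByClass(WordCount.class);

Building a Jar in IntelliJ IDEA

This part follows "Intellij IDEA 搭建Hadoop开发环境" (setting up a Hadoop development environment in IntelliJ IDEA).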

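- Choose File->Project Structure to open the Project Structure dialog

- Select Artifacts on the left, then click the “+” button at the top

- In the popup, choose JAR->From modules with dependencies...

- Select the main class to launch, then click OK

- After you apply the changes, the dialog closes. In IDEA, choose Build->Build Artifacts and then Build or Rebuild to generate the jar. The jar file is placed under out/artifacts in the project directory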
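To confirm which main class the generated jar declares, its manifest can be read with the standard `java.util.jar` API. This is a small sketch; the class name `JarMainClass` is hypothetical, and the jar path is passed as the first argument.

```java
import java.io.IOException;
import java.util.jar.Attributes;
import java.util.jar.JarFile;
import java.util.jar.Manifest;

/** Sketch: print the Main-Class entry recorded in a jar's manifest. */
final class JarMainClass {

    /** Returns the Main-Class manifest attribute, or null if the jar declares none. */
    static String mainClassOf(String jarPath) throws IOException {
        try (JarFile jar = new JarFile(jarPath)) {
            Manifest manifest = jar.getManifest();
            if (manifest == null) {
                return null;
            }
            return manifest.getMainAttributes().getValue(Attributes.Name.MAIN_CLASS);
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println("Main-Class: " + mainClassOf(args[0]));
    }
}
```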

Note that args[0] and args[1] need to change. Replace the following two lines of the original WordCount

    FileInputFormat.addInputPath(job, new Path(args[0]));
    FileOutputFormat.setOutputPath(job, new Path(args[1]));

with

    FileInputFormat.addInputPath(job, new Path(args[1]));
    FileOutputFormat.setOutputPath(job, new Path(args[2]));

For the reason, see "org.apache.hadoop.mapred.FileAlreadyExistsException".
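A more defensive alternative to hard-coded indices is to take the input and output paths from the end of the argument list. The sketch below assumes the index shift comes from an extra leading argument (for example, a class name passed on the command line even though the jar already declares a main class); that cause is an assumption here, and `JobPaths` is a hypothetical helper, not part of WordCount or Hadoop.

```java
/** Sketch: read input/output paths from the last two arguments, ignoring any leading extras. */
final class JobPaths {

    final String input;
    final String output;

    private JobPaths(String input, String output) {
        this.input = input;
        this.output = output;
    }

    /** Treats the last two arguments as input and output paths. */
    static JobPaths fromArgs(String[] args) {
        if (args.length < 2) {
            throw new IllegalArgumentException("Expected at least <input> <output>");
        }
        return new JobPaths(args[args.length - 2], args[args.length - 1]);
    }

    public static void main(String[] args) {
        JobPaths paths = fromArgs(args);
        System.out.println("input=" + paths.input + ", output=" + paths.output);
    }
}
```

In the driver, `paths.input` and `paths.output` would then replace the `args[...]` lookups, so the same jar works whether or not an extra leading argument is present.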

References

- Build and Install Hadoop 2.x or newer on Windows

- Hadoop 2.7.1 for Windows 10 binary build with Visual Studio 2015 (unofficial)

- OutOfMemoryError

- HOW-TO: COMPILE AND DEBUG HADOOP APPLICATIONS WITH INTELLIJ IDEA IN WIDNOWS OS(64BIT)

- Intellij IDEA 搭建Hadoop开发环境

- org.apache.hadoop.mapred.FileAlreadyExistsException